With its Einstein service, Salesforce mixes automated AI with custom data models developers can create for dealing with the specific needs of their customers.

Despite the white-haired personification it uses in marketing, Salesforce Einstein isn’t an AI assistant like Alexa or Cortana; instead it’s a set of AI-powered services across the range of Salesforce offerings (Sales Cloud, Commerce Cloud, App Cloud, Analytics Cloud, IoT Cloud, Service Cloud, Marketing Cloud and Community Cloud).

Some of these work automatically in the standard Salesforce tools, like SalesforceIQ Cloud and Sales Insights in Sales Cloud. Turn these on in the admin portal and you can add the Score field to a Salesforce view, or use it in a detail page in a Lightning app, to see lead scoring that suggests how likely a potential customer is to actually buy something. You also get reminders to follow up with specific people to stop a deal going cold, and see news stories that might affect a sale (like one customer buying out another).

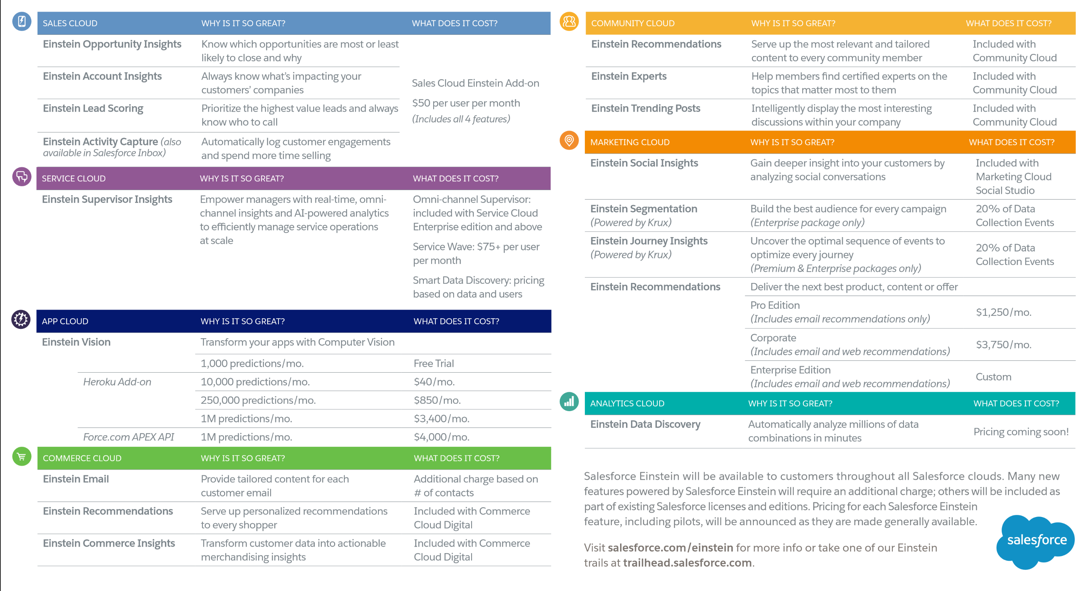

Einstein tools are (or will be) available for a range of different Salesforce cloud services (credit: Salesforce).

For the Marketing Cloud, Einstein provides similar predictive scores to suggest which customers will buy something based on a marketing email and which will unsubscribe when they get it. It also groups potential customers into audience segments who share multiple predicted behaviors and suggests the best time to deliver a marketing email. Service Cloud Einstein suggests the best agent to handle a case.

Commerce Cloud automatically personalizes the products on the page with product recommendation and predictive sort views (and you can customize that with business rules in the admin portal). Machine learning-based spam detection for Salesforce Communities is in private preview; it learns what counts as an inappropriate comment from the behavior of human moderators. Those scores and insights use the structured data that Salesforce stores in the CRM, for example when a salesperson marks an inquiry as a sales opportunity (as well as email data from Office 365 and Google that you can connect).

Prices and features for Salesforce Einstein AI services (credit Salesforce).

Because such a wide range of businesses use Salesforce, the same data models and algorithms wouldn’t work well across all of them. So the Einstein tools automatically build multiple models, transform the tenant data (which is stored in Apache Spark) and evaluate which models and parameter choices give the most accurate predictions for each new customer — so one Salesforce customer might have data that can be best analyzed with a random forest algorithm and another might get better results with linear regression.
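The idea of trying several models and keeping whichever predicts best on held-out data can be sketched in a few lines of Python. This is only an illustration, not Salesforce's pipeline: the two candidate models (a mean baseline and a one-variable least-squares line) are deliberately tiny stand-ins for algorithms like random forest or linear regression, and every function name here is hypothetical.

```python
import random
from statistics import mean

def fit_mean(xs, ys):
    """Baseline candidate: always predict the mean of the training targets."""
    m = mean(ys)
    return lambda x: m

def fit_linear(xs, ys):
    """Candidate: one-variable least-squares line."""
    mx, my = mean(xs), mean(ys)
    var = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / var if var else 0.0
    intercept = my - slope * mx
    return lambda x: slope * x + intercept

def mse(model, xs, ys):
    """Mean squared error of a fitted model on the given points."""
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))

def select_model(candidates, xs, ys, holdout=0.25, seed=0):
    """Fit each candidate on a training split, score it on the held-out
    split, and return the best candidate's name plus all scores."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    cut = int(len(idx) * (1 - holdout))
    train, test = idx[:cut], idx[cut:]
    scores = {}
    for name, fit in candidates.items():
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        scores[name] = mse(model, [xs[i] for i in test], [ys[i] for i in test])
    best = min(scores, key=scores.get)
    return best, scores
```

Calling `select_model({"mean": fit_mean, "linear": fit_linear}, xs, ys)` on one tenant's data might pick the line, while a different tenant's data could favor the baseline; the selection logic stays the same either way.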

That’s all automated in the Salesforce platform, down to being able to detect what language your customers are talking to you in. Your business needs to be using Salesforce enough to create sufficient data for it to learn from; for predictive lead scoring that means at least 1,000 leads created and 120 opportunities converted to sales over the last six months, at a rate of at least 20 a month. The more information the sales team puts into those records, the more accurate the lead insight is likely to be. In fact, before you can turn on Sales Cloud Einstein, you have to run the Einstein Readiness Assessor, which builds and scores the models to see if there’s enough data to generate useful predictions and if the predictions are going to help your business.
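As a rough pre-check before asking for an assessment, the volume thresholds quoted above can be written as a small function. This is one reading of those numbers, not the Readiness Assessor itself (which builds and scores real models); the function name and the choice to treat "20 a month" as a floor for every one of the six months are assumptions.

```python
def lead_scoring_ready(leads_created, monthly_conversions):
    """Check the data-volume thresholds quoted for predictive lead scoring.

    leads_created: leads created over the last six months.
    monthly_conversions: six integers, opportunities converted to sales
    in each of the last six months.
    """
    if len(monthly_conversions) != 6:
        raise ValueError("expected conversions for exactly six months")
    return (
        leads_created >= 1000                  # at least 1,000 leads
        and sum(monthly_conversions) >= 120    # at least 120 conversions
        and min(monthly_conversions) >= 20     # at least 20 every month (assumed reading)
    )
```

Under this reading, a business with 150 conversions bunched into three busy months would still fail the check, because the quieter months fall below 20.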

Machine Learning for All (According to Their Needs)

“We evaluate customers who are interested in Einstein based on the size and shape of their data and make recommendations based on how useful it will be to them,” Vitaly Gordon, vice president for data science and engineering on Salesforce Einstein, said at the most recent TrailheadDX, the company’s developer conference.

There are some companies where 50 percent of their leads become opportunities and for others, it’s only 0.1 percent; knowing who to target would be very useful for the second kind of company but even a very accurate prediction about lead conversion wouldn’t make much difference to the first business, he pointed out. “Sometimes it’s more about anomaly detection, which needs a different set of algorithms,” Gordon said.

And if you only have ten leads a day, just call them all; “AI is not a ‘one glove fits all’ tool and not every problem needs AI,” Gordon said.

To help customers trust these automated machine learning systems, Salesforce shows why a particular lead has been scored high or low. “We explain why we believe a lead will work; we explain which models are influencing the score and what in the data is signaling that the opportunity will convert,” Gordon said.

Those scores also give you the expected accuracy of the prediction. “We say we think this is the right next step, with say 82 percent or 77 percent accuracy, so it’s like a guidepost,” Gordon said.

Those customer-specific models also get updated automatically as new data comes in, and a percentage of the data is reserved for ongoing testing as well as training, so Einstein can track the accuracy of predictions and spot changes to your data that mean a new model is needed. That would show up in the predictors used to explain the score, which should also help users feel comfortable about the predictions. The systems also look for “leaky features”: predictions that are too good to be true, because they’re predicting a combination of events that will never actually happen.

For developers, Salesforce has three machine learning APIs for building custom models using deep learning on unstructured data: for vision, sentiment and intent. The Einstein Object Detection vision API is now generally available; the Intent and Sentiment APIs are in preview.

Training the convolutional neural network behind the Object Detection API can be done by zipping up folders of images in each of the categories you want to recognize (the folder names become the category labels) and uploading those to Salesforce. You need 200 to 500 images per label, with a wide range of examples and a similar number of examples per label.
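Preparing that archive is easy to script. The sketch below counts the images in each label folder, flags labels that fall outside the suggested 200 to 500 range, and writes the zip with one top-level folder per label. The list of image extensions and the function names are assumptions for illustration; the upload step itself is left out because it depends on the API details.

```python
import zipfile
from pathlib import Path

# Assumed set of accepted image extensions; adjust to what the API allows.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}

def count_images_per_label(root):
    """Return {folder name: number of image files} for each label folder."""
    counts = {}
    for folder in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        counts[folder.name] = sum(
            1 for f in folder.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts

def labels_outside_range(counts, low=200, high=500):
    """List labels whose image count is outside the suggested range."""
    return [label for label, n in counts.items() if not low <= n <= high]

def zip_dataset(root, out_path):
    """Zip every image under root, keeping the label folder in each path."""
    root = Path(root)
    with zipfile.ZipFile(out_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for f in sorted(root.rglob("*")):
            if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES:
                zf.write(f, f.relative_to(root).as_posix())
    return out_path
```

Running `labels_outside_range(count_images_per_label("images"))` before zipping gives a quick list of categories that need more (or fewer) examples to keep the labels balanced.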

That creates an endpoint that you can send new images to, to detect objects, like a pair of shoes or a pair of pants, and it can also classify the image by counting how many pairs of shoes and pants are in the image and what color they are, or recognizing that a shelf is empty and needs to be restocked with products.

Image recognition might help make lead scoring more accurate; someone installing solar panels could use the API to look at the address on Google Maps and see whether the roof type is suitable for panels.

The API can also be used for anomaly detection; if employees are uploading a large number of images, they can use image classification to suggest any that might not be relevant to the task. Image recognition doesn’t yet extract text from images using OCR, but Salesforce is working on this.

Each recognition comes with a certainty percentage. The API automatically reserves some of the training data for testing, and developers can see the test and training accuracy scores and a confusion matrix for the data model in the Einstein API playground. They can determine if they need more data for training — perhaps by labeling false positives and negatives for it to learn from.
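For readers who haven't met a confusion matrix before, here is a minimal sketch of how one is computed from a held-out test set, alongside plain accuracy. It is independent of the Einstein playground, and the function names are illustrative only.

```python
from collections import Counter

def confusion_matrix(actual, predicted):
    """Return (labels, matrix) where matrix[i][j] counts test items whose
    true label is labels[i] and whose predicted label is labels[j]."""
    labels = sorted(set(actual) | set(predicted))
    pairs = Counter(zip(actual, predicted))
    matrix = [[pairs[(a, p)] for p in labels] for a in labels]
    return labels, matrix

def accuracy(actual, predicted):
    """Fraction of test items whose predicted label matches the true one."""
    return sum(a == p for a, p in zip(actual, predicted)) / len(actual)
```

Large off-diagonal counts show which pair of labels the model mixes up, which is usually where extra training images help most. A training accuracy far above the test accuracy is a common sign that the model has memorized its examples rather than generalized.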

Developers can add a button to the interface in the app to let customers or employees mark images that have been recognized incorrectly. As this is a custom model, developers will need to evaluate the accuracy over time themselves; the system won’t warn them if the accuracy scores are falling.

The Intent API tries to extract what a customer wants from the text of their messages. The API can be trained by uploading a CSV file with two columns, one for the phrases customers are likely to use (like “I can’t log in” or “my bike wheel is bent”) and one for the labels those phrases represent in your process (like “password help” or “customer support”).
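A two-column training file like that can be generated with Python's standard `csv` module, which takes care of quoting phrases that contain commas. Whether the API expects a header row is not stated here, so this sketch assumes none; the function name is hypothetical.

```python
import csv
import io

def intent_csv(examples):
    """Render (phrase, label) pairs as two-column CSV text.

    Assumption: no header row, phrase in column one, label in column two.
    """
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    for phrase, label in examples:
        writer.writerow([phrase.strip(), label.strip()])
    return buf.getvalue()

examples = [
    ("I can't log in", "password help"),
    ("my bike wheel is bent", "customer support"),
]
csv_text = intent_csv(examples)
```

Writing `csv_text` to a file gives something ready to upload; using the `csv` module rather than joining strings by hand avoids broken rows when a customer phrase includes a comma or a quotation mark.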

For accurate predictions, Salesforce suggests over 100 phrases per label. Training is asynchronous, so it will take a little time before the API is ready to call. Currently, the API only looks at the first 50 words of every message it scores, although that will be extended. It’s a good idea to have a mix of short and long phrases to avoid false correlations from a few examples of longer messages.
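Both of those guidelines are simple to check before uploading. The sketch below flags labels that don't yet have more than 100 phrases and shows what a message looks like when cut to its first 50 words. Splitting on whitespace is an assumption; how the API actually counts words isn't described here, and the function names are illustrative.

```python
from collections import Counter

def underrepresented_labels(examples, minimum=100):
    """Return {label: count} for labels with no more than `minimum` phrases.

    examples: iterable of (phrase, label) pairs.
    """
    counts = Counter(label for _, label in examples)
    return {label: n for label, n in counts.items() if n <= minimum}

def first_words(text, limit=50):
    """Keep only the first `limit` whitespace-separated words of a message."""
    return " ".join(text.split()[:limit])
```

Previewing long messages with `first_words` is a quick way to see whether the part that carries the customer's intent actually falls inside the window the model scores.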

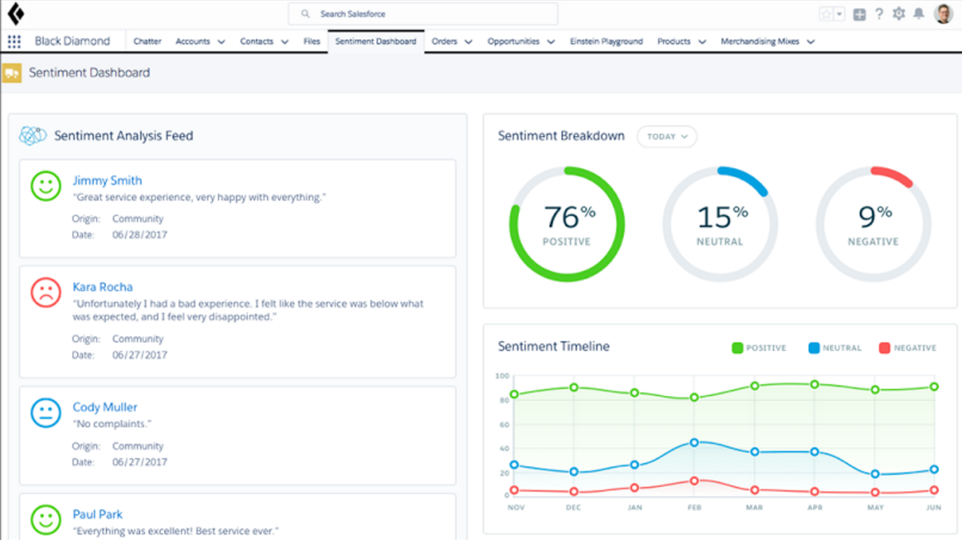

Intent can be used in conjunction with the Sentiment API to tell when a customer is unhappy, or it could be used to keep an eye on customer communications generally to see if they’re positive, negative or neutral. Again, sentiment is a pre-trained model, but uploading data labels like product names or specific positive and negative terms makes the sentiment fit your domain better. There’s a limit of 1GB per upload to all the APIs, but you can make multiple uploads.

The Einstein Sentiment API classifies the tone of text — like emails, reviews and social media posts — as positive, neutral or negative (credit Salesforce).

Salesforce is working on several other AI tools. Heroku Enterprise supports the Apache PredictionIO open source framework (with Kafka as a Heroku service for streaming big data to Heroku) and Salesforce is creating a wrapper to make it easier to build your own custom intelligent apps using PredictionIO.

There’s also integration with IBM Watson APIs. Salesforce Chief Product Officer Alex Dayon explained these as being different layers of data.

“Einstein is in the Salesforce platform using Salesforce data. Watson is a separate set of libraries and data sets, like weather prediction data, and we want to make sure that the data sets can be shared and that Watson can trigger Salesforce business processes,” Dayon said. When using Watson predictive maintenance, that service could send an alert that a piece of machinery was going to have a problem, and Salesforce Field Service could automatically dispatch a technician.

“We’re trying to make sure that Einstein can tap into data sets coming from other platforms,” said Dayon.

Feature image by Seth Willingham on Unsplash.