Removing the Roadblock to Continuous Performance Testing

Wolfgang Platz

Wolfgang is the founder and chief product officer of Tricentis. He is the force behind software testing innovations such as model-based test automation and the linear expansion test design methodology.

You can’t afford to have a new feature, update, or bug fix bring you two steps forward and three steps back. The new functionality must work flawlessly — and it can’t disrupt the pre-existing functionality that users have come to rely on. This is why you need continuous performance testing.

Unfortunately, it’s all too easy to break something when different teams are evolving different components built on many different architectures, at different speeds, all in parallel. But users and stakeholders don’t care that delivering good software is hard. A software failure is now a business failure, and any type of problem affects operational efficiency, customer or employee satisfaction, revenue and competitive advantage.

Continuous Testing, Partly

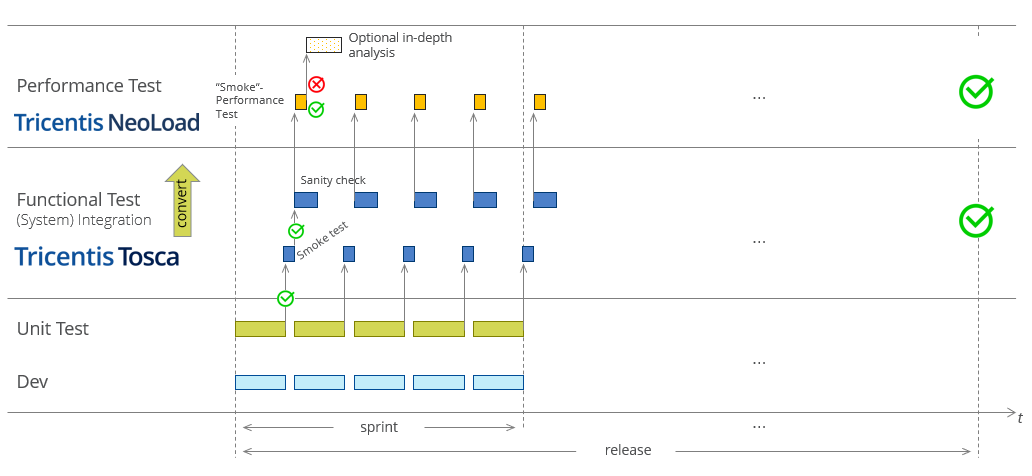

Most organizations are doing a fairly good job exposing the most glaring functional issues by integrating essential tests into the continuous integration/continuous delivery (CI/CD) pipeline. They rely on “smoke tests” to check that the application won’t flat out crash and burn if it progresses through the delivery pipeline. This includes unit tests plus a select set of functional tests that step through critical use cases from the perspective of the end user. The smoke tests are typically supplemented by a somewhat broader set of tests, providing peace of mind that core functionality still produces the expected results. These sanity tests aren’t practical to execute with every build, but they can usually be run overnight.
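To make the two tiers concrete, here is a minimal sketch of how a pipeline might choose which tests to run for a given trigger. The test names, the tier labels and the `select_tests` helper are all hypothetical and only illustrate the split described above: smoke tests on every build, sanity tests added overnight.

```python
from __future__ import annotations

from enum import Enum


class Trigger(Enum):
    COMMIT = "commit"    # every build that enters the pipeline
    NIGHTLY = "nightly"  # the scheduled overnight run


# Hypothetical catalog mapping each test to its tier.
TEST_CATALOG = {
    "test_unit_price_rounding": "smoke",
    "test_login_and_checkout": "smoke",
    "test_order_history_export": "sanity",
    "test_bulk_discount_rules": "sanity",
}


def select_tests(trigger: Trigger) -> list[str]:
    """Return smoke tests for a commit; smoke plus sanity tests for a nightly run."""
    tiers = {"smoke"} if trigger is Trigger.COMMIT else {"smoke", "sanity"}
    return sorted(name for name, tier in TEST_CATALOG.items() if tier in tiers)
```

In this sketch, `select_tests(Trigger.COMMIT)` returns only the two smoke tests, while `select_tests(Trigger.NIGHTLY)` returns all four.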

With the combination of continuous smoke tests and regular sanity tests, you usually get adequate warning if functionality is fundamentally broken. But what about performance issues? Why not check for them as well? After all, if users get frustrated by delays and leave your application before completing a process, it doesn’t really matter if the process could have produced the correct result in the end. It didn’t, because the users never made it that far.

However, while functional testing has become widely accepted as a core part of the CI/CD process, performance testing tends to lag behind. It remains a late-cycle activity, just as it was in the days of waterfall development. From a quality perspective, this doesn’t make any sense. Both functional correctness and performance characteristics like scalability, responsiveness, and availability are vital signs. It’s like continuously monitoring an ICU patient’s pulse but checking their respiration rate only once a week.

Why hasn’t the progress we’ve made in one area of testing been carried over to the other?

Performance Testing and Functional Testing

At the root of the problem is the fact that functional tests and performance tests are fundamentally different. Even if you want to test the same core set of use cases that your smoke tests cover, you typically need to create, and maintain, a totally different set of tests for performance testing. These tests are written in a completely different way, using different tools, often by different people, to access the application in a distinctly different way.

UI-based smoke tests exercise the application just like a human would, simulating interactions with the controls on the screen. It’s 100% from the user perspective. These tests can be created with UI testing tools that allow test automation to be created intuitively. Performance testing, however, is usually performed by accessing the application through the network layer. The process is much less intuitive. Moreover, the network layer is extremely volatile, and this volatility means that performance tests need attention and tuning for virtually every test run.

Just developing a separate set of tests specifically for performance testing is not easy. But keeping them in sync with the evolving application is a never-ending chore. Most teams struggle to find the time and resources required to keep a tight suite of functional tests maintained. Keeping performance tests up to date is even harder. They’re even more unstable than UI tests, which are infamous for their instability. They’re also more tedious to update, since you’re working “under the hood” at the technical protocol layer instead of at the intuitive UI layer. That’s why performance tests are typically the domain of dedicated performance test engineers, who often operate performance testing as a shared service. Most sit outside of cross-functional Agile teams and aren’t readily available for routine performance test maintenance.

Getting the core performance test scripts updated at the end of each sprint is already a stretch for the vast majority of teams. Actually getting them updated for in-sprint execution? That rarely happens.

One Test Definition, Infinite Possibilities

But just imagine what you could achieve if your performance tests didn’t lag so far behind.

A developer checks in an incremental change, then the functional test runs. If that test succeeds, you can assume two things. One, the corresponding application functionality works as expected for a single user. Two, that functional test has been properly maintained, which means it could be run under load for performance testing, if only functional tests could be reused for performance testing.

The corresponding performance test then executes. The team knows immediately if the latest changes negatively impact the user experience in terms of functionality or performance. They don’t need to wait weeks or even months for performance test engineers to alert them that one of potentially hundreds or thousands of changes caused a performance problem. They don’t have to waste all sorts of time hunting down the source of the problem and trying to unravel the issue days, weeks or even months after it was introduced. Plus, they can immediately stop that build from progressing along the delivery pipeline.
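The gating step described here can be sketched in a few lines. This is an illustration under assumptions, not any particular tool's behavior: the `gate_build` function, the 95th-percentile metric and the latency budget are all hypothetical choices, and a real pipeline would pick its own metrics and thresholds.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list; pct is an integer from 1 to 100."""
    if not samples:
        raise ValueError("no latency samples collected")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def gate_build(functional_passed, latencies_s, p95_budget_s):
    """Decide whether a build may progress; returns (ok, reason)."""
    # A failing functional test stops the build before any load is applied.
    if not functional_passed:
        return False, "functional test failed; performance run skipped"
    p95 = percentile(latencies_s, 95)
    if p95 > p95_budget_s:
        return False, f"p95 latency {p95:.3f}s exceeds budget {p95_budget_s:.3f}s"
    return True, f"p95 latency {p95:.3f}s within budget {p95_budget_s:.3f}s"
```

A pipeline step would call `gate_build` with the latencies from the load run and stop the build whenever the first element of the result is `False`.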

Well, good news. It’s entirely feasible with a product called NeoLoad. NeoLoad was created by Neotys, and I’m super excited to welcome the product and the company to the Tricentis family.

NeoLoad takes functional tests and flips them into performance tests with just one click. That includes tests built with Selenium, Tricentis Tosca and other functional testing tools. This means that you don’t need to maintain two separate sets of tests for performance testing and functional testing. Just keep your functional tests up to date, and you’ve got exactly what you need. No additional test creation or maintenance is required. With all the time and effort saved, performance testing within CI/CD suddenly becomes feasible.
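The idea of one test definition serving both purposes can be illustrated without any specific product. The sketch below is not NeoLoad's API or mechanism. It is a simplified, hypothetical example in which `functional_checkout_test` stands in for an existing functional test and `run_under_load` replays that same function from several concurrent "virtual users" while recording how long each run takes.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def functional_checkout_test():
    """Hypothetical functional test; a real one would drive the application."""
    time.sleep(0.01)  # placeholder for the real user journey
    result = "order confirmed"
    assert result == "order confirmed"


def run_under_load(test_fn, virtual_users, iterations_per_user):
    """Run the same functional test concurrently and return per-run latencies in seconds."""

    def user_session(user_id):
        timings = []
        for _ in range(iterations_per_user):
            start = time.perf_counter()
            test_fn()  # an AssertionError here surfaces when results are collected
            timings.append(time.perf_counter() - start)
        return timings

    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        per_user = list(pool.map(user_session, range(virtual_users)))
    # Flatten the per-user lists into one list of latency samples.
    return [t for timings in per_user for t in timings]
```

Calling `run_under_load(functional_checkout_test, 5, 4)` would yield 20 latency samples from a single test definition. Threads are used here only for brevity; real load generators typically distribute virtual users across processes or machines.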

With this approach, performance specialists are relieved from creating basic performance tests. Instead, they can focus on examining any issues found and completing more advanced performance engineering, creating sophisticated workload models, profiling to fine-tune the application and so on.

Getting performance testing from your existing functional tests is quite the value-add. It’s a lot like tagging along when a close friend is visiting his native country. You don’t need to worry about extra planning and preparation. You don’t even need to learn a new language. You can just benefit from being along for the ride.

InApps is a wholly owned subsidiary of Insight Partners, an investor in the following companies mentioned in this article: Neotys, Tricentis.

Lead image via Pixabay.

Source: InApps.net
