Intel Unveils Next Generation Neuromorphic Computing Chip

Intel is bolstering its neuromorphic computing ambitions with the second iteration of its Loihi processor and an open framework that the company hopes will accelerate the development of software for the emerging space.

The second-generation Loihi 2 processor, introduced Sept. 30, offers significant improvements over its predecessor in such areas as performance, programmability, deep learning capabilities and energy efficiency. Just as important, however, is the launch of the Lava software framework, which is meant to drive the development of applications and fuel the growth of a community around neuromorphic computing.

“Software continues to hold back the field,” Mike Davies, senior principal engineer and director of Intel’s Neuromorphic Computing Lab, said during a press briefing about Loihi 2 and Lava. “There hasn’t been a lot of progress — not at the same pace as the hardware — over the past several years and there hasn’t been an emergence of a single software framework, as we’ve seen in the deep learning world, where we have TensorFlow and PyTorch gathering huge momentum and a user base.”

Neuromorphic computing is among a number of novel computing architectures that include quantum computing and deep learning. Neuromorphic hardware and software are designed to model systems in the biological brain and nervous system and are aimed at driving energy efficiency, computational speed, and capacity in a range of uses, from vision, voice, and gesture recognition to robotics and search efforts. The approach can also make artificial intelligence (AI) capabilities accessible to a wider audience.

Intel, IBM Lead the Way

Intel is among a broad array of chip makers big and small that are developing silicon for neuromorphic computing. Intel’s Loihi and IBM’s TrueNorth are among the best-known neuromorphic computing chips, though other vendors, from established players like Qualcomm and Samsung to smaller companies like BrainChip and Applied Brain Research, also are looking for traction in a global market that Verified Research predicts will grow from $22.06 million last year to $3.5 billion by 2028.

Intel in 2018 launched its Loihi processor, a research chip designed to test the architecture and assess its value. It’s a new computer architecture, with several key differences from conventional chips. There is no off-chip DRAM or shared memory space with cache hierarchies that all processors and cores can access. The memory instead is tightly embedded in the individual cores and dispersed into small memory banks within the cores, similar to how synapses are widely distributed throughout the brain, Davies said.

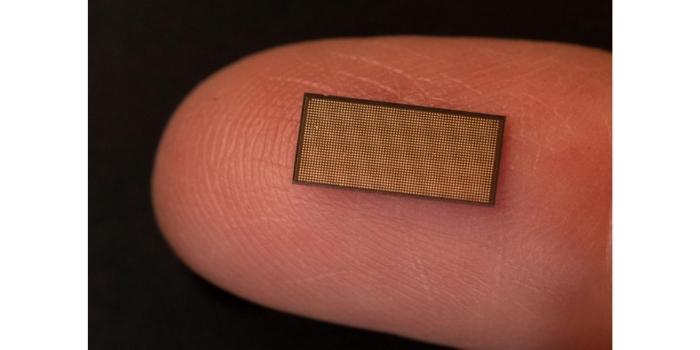

Intel’s Loihi 2 neuromorphic chip on the tip of a finger.

Information coding and processing happen in a highly asynchronous manner, again similar to the brain, where pulses of voltage called “spikes” mediate the transfer of information between billions of neurons in a highly efficient manner. With the Loihi architecture, computation is performed in an event-based way: circuits are activated only when spikes arrive to trigger them.
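The event-based idea can be illustrated with a minimal sketch. It assumes a textbook leaky integrate-and-fire neuron, which is not described in the briefing and is not Intel's implementation; the class name `LIFNeuron`, the function `run`, and the parameter values are all hypothetical. The point it shows is that the neuron does no work between spikes: its state is only updated when an input event arrives.

```python
import math
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Hypothetical leaky integrate-and-fire neuron, updated only when a spike arrives."""
    threshold: float = 1.0   # membrane voltage at which the neuron fires
    tau: float = 10.0        # leak time constant, in arbitrary time units
    voltage: float = 0.0     # current membrane voltage
    last_event: float = 0.0  # time of the most recent input spike

    def receive(self, time: float, weight: float) -> bool:
        """Process one incoming spike; return True if this neuron fires."""
        # Apply the leak for the whole idle interval in a single step,
        # so no computation happens while the neuron is silent.
        self.voltage *= math.exp(-(time - self.last_event) / self.tau)
        self.last_event = time
        self.voltage += weight
        if self.voltage >= self.threshold:
            self.voltage = 0.0  # reset after firing
            return True
        return False


def run(events):
    """Feed (time, weight) input spikes to one neuron; return its output spike times."""
    neuron = LIFNeuron()
    return [t for t, w in sorted(events) if neuron.receive(t, w)]
```

In this sketch, two input spikes that arrive close together push the voltage over the threshold, while the same spikes spaced far apart leak away before they can add up.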

Intel engineers have found encouraging results in such areas as power efficiency, performance, and latency for uses from gesture recognition and learning and adaptive robotic arm control to scene understanding and odor recognition and learning, Davies said. However, Loihi also had limitations, including insufficient neuron flexibility, limited support for the latest learning algorithms, performance bottlenecks and integration issues with conventional computing architectures.

Enter Loihi 2

Loihi 2 delivers improvements over its predecessor, including 10 times faster processing and 15 times more resource density (due in large part to 3D scaling), with up to 1 million neurons per chip, eight times more than Loihi. In addition, the neurons are programmable and the chip supports generalized spike messaging, both of which improve Loihi 2’s deep learning capabilities. The allocation of internal memory banks in Loihi was fixed. With Loihi 2, the internal memory banks in each core can be allocated at different stages of the pipeline.

The new chip also can more easily integrate with conventional processors, novel sensors, and robotics systems. Leveraging a pre-production version of the Intel 4 manufacturing process, engineers were able to use extreme ultraviolet (EUV) lithography to simplify the layout design rules to accelerate the development of Loihi 2.

Density Is Key

Resource density is a key issue in Intel’s Loihi design. Davies noted that without off-chip DRAM, system designers need to use more chips to scale up to large problems. Intel created a system called Pohoiki Springs that used 768 Loihi chips to address challenges such as similarity search, a problem that conventional methods could address using a single chip.

“Getting to a higher amount of storage neurons and synapses in a single chip is really essential for the commercial viability [and] economic aspect of neuromorphic chips and commercializing them in a way that makes sense for customer applications,” Davies said.

The Intel 4 process enabled Loihi 2 to have 128 cores, each with about the same amount of memory as the previous generation, but on a die that is 1.9 times smaller.

Intel is offering two neuromorphic systems based on Loihi 2 via the Neuromorphic Research cloud to members of the Intel Neuromorphic Research Community (INRC). Oheo Gulch is a single-chip system designed for early evaluation and cloud use. Kapoho Point is a stackable eight-chip system with an Ethernet interface; it will be available soon.

Lava Comes Flowing in

Like Loihi 2, the Lava software framework is a research tool rather than a completed product, Davies said.

“We’ve taken all the lessons learned from these past three and a half years of software and application development and we tried to architect the system that we think can support the full broad range of algorithms and applications and software approaches that the community that’s using Loihi — and now Loihi 2 — are trying to support and trying to explore providing a common denominator framework that is built on a foundation of event-based asynchronous message passing, which is ultimately the model that applies to these spiking neurons, as well as to the more conventionally coded processes that exist in conventional ingredients in the overall system architecture,” he said.

Flexibility is a key part of the framework. Applications developed via Lava will be able to run on a conventional CPU without access to Loihi 2 or neuromorphic computing hardware. However, as users get access to Loihi 2, they can map portions of the workload designed for the neuromorphic architecture to the chip, which Davies said will lower the barrier to entry into the neuromorphic computing field “because you don’t have to deal with the evaluation process and the headaches that go with using research involving chip architecture.”
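To make the “event-based asynchronous message passing” model concrete, here is a minimal sketch that runs on an ordinary CPU using only the Python standard library. It does not use the Lava API; the function names (`source`, `threshold_unit`, `run_pipeline`), the `STOP` sentinel, and the threshold value are assumptions made for illustration. Each process sleeps until a message arrives on its input channel, which is the behavior the quote describes for both spiking neurons and conventional code.

```python
import queue
import threading

STOP = None  # sentinel message that tells a process to shut down


def source(out_ch, values):
    """Emit one message per input value, then signal the end of the stream."""
    for v in values:
        out_ch.put(v)
    out_ch.put(STOP)


def threshold_unit(in_ch, out_ch, threshold):
    """Wake only when a message arrives; forward values at or above the threshold."""
    while True:
        msg = in_ch.get()  # blocks until an event arrives
        if msg is STOP:
            out_ch.put(STOP)
            return
        if msg >= threshold:
            out_ch.put(msg)


def run_pipeline(values, threshold=0.5):
    """Wire two processes together with channels and collect the output events."""
    a_to_b, b_to_sink = queue.Queue(), queue.Queue()
    workers = [
        threading.Thread(target=source, args=(a_to_b, values)),
        threading.Thread(target=threshold_unit, args=(a_to_b, b_to_sink, threshold)),
    ]
    for w in workers:
        w.start()
    results = []
    # Drain the sink channel until the end-of-stream sentinel arrives.
    while (msg := b_to_sink.get()) is not STOP:
        results.append(msg)
    for w in workers:
        w.join()
    return results
```

Because the processes only talk through channels, a framework built this way could in principle swap the thread running `threshold_unit` for one mapped to neuromorphic hardware without changing the rest of the pipeline. That is the kind of portability the article describes, though how Lava achieves it is not detailed here.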

An Open Framework

It’s also an open framework with permissive licensing.

“This is a project that we hope to invite many others to come and contribute, to carry it forward in the way that the community sees as best,” he said. “People will even put this onto other platforms and that’s completely fine as far as we’re concerned. We see a need for convergence and communal development here towards this greater goal, which is going to be necessary for commercializing neuromorphic technology in general by anyone.”

This framework will help build a community around neuromorphic computing the way TensorFlow and PyTorch have done with deep learning, he said. Right now, even though Intel has made gains in its work with Loihi and its own software, there has been little sharing among developers of the code that’s been used to create these applications.

“It makes it hard for other groups to build on the progress and the results that others are making,” Davies said. “And it’s hard to now get to a point where these can all be composed into larger-scale applications. … The whole of that application is greater than the sum of just the individual parts and we can’t even really create the whole itself with the current software tools. This has been a big problem.”

The end goal of all of this is to mature and commercialize general-purpose neuromorphic computing to the point where it can sit alongside more established chip architectures and run workloads suited for the systems. Such workloads include anything that has to respond quickly under a power budget, such as mobile or edge applications, he said.

The architecture also will have use in data center environments, addressing hard problems — Davies used the example of railway scheduling — more quickly than conventional approaches.
