This year has been a good one for robots in the epic battle of Man vs. Machine. It’s been decades since the first computer beat a chess champion, but the ancient Chinese game of Go — which supposedly has more possible moves than there are atoms in the universe — had always escaped the robot’s grasp.

At least until Google’s AlphaGo took four out of five games against the reigning human world champion. How’d AlphaGo do it? Well, basically it taught itself. DeepMind, Google’s artificial intelligence subsidiary, spent the last two years building a database of 100,000 human-played games of Go. It fed that database into AlphaGo, which then played against itself millions of times, using machine learning and neural networks to improve until it was finally the victor.

Sound a bit heady? Well, it is. But when you take that machine learning and artificial intelligence to the next level of deep learning, well, your neurons take a hit. At least that’s what I thought when I sat down for Roberto Paredes Palacios’ talk at the PAPIs Connect predictive API and machine learning conference. The Polytechnic University of Valencia professor’s talk tried to demystify deep learning; some of that clarity we’ll hopefully pass on to you today.

So what is deep learning? Some of its other names give a bit more insight; it’s also called deep-structured learning, hierarchical learning or deep machine learning. As these names suggest, deep learning is about organizing machine learning into a hierarchy: many layers, each one restructuring the output of the layer before it.

“Deep learning means we are going to stack lots of layers, and we are going to build very deep models,” Paredes Palacios explained.

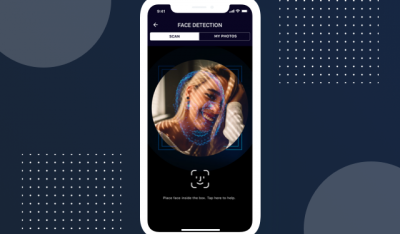

It’s this level of deep machine learning that is making true machine-based voice, image and pattern recognition finally possible. He says that “Deep learning bridges the gap between raw pixel representation and categories.”

Of course, as Paredes Palacios said, “There’s a bag of things known to be deep learning but aren’t that deep.” By his definition, deep learning takes the raw representation of data and maps it to categories through ten to twenty layers. Data does not only flow forward through those layers; errors are also back-propagated to correct mistakes.
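To make "stacking layers" and "back-propagating" a little more concrete, here is a minimal sketch in plain Python. It is not the speaker's code: the function names, the tanh activation, the squared-error loss and the layer sizes are all assumptions chosen to keep the example small.

```python
import math
import random

def init_layers(sizes, seed=0):
    """Create one weight matrix (with a trailing bias column) per layer."""
    rng = random.Random(seed)
    # layers[k][j][i] is the weight from input i to unit j of layer k.
    return [[[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_out)]
            for n_in, n_out in zip(sizes, sizes[1:])]

def forward(layers, x):
    """Forward pass: feed x through every layer, keeping each layer's output."""
    activations = [list(x)]
    for layer in layers:
        inputs = activations[-1] + [1.0]  # constant 1.0 feeds the bias weight
        activations.append([math.tanh(sum(w * v for w, v in zip(row, inputs)))
                            for row in layer])
    return activations

def loss(layers, x, target):
    """Squared error between the last layer's output and the target."""
    out = forward(layers, x)[-1]
    return 0.5 * sum((o - t) ** 2 for o, t in zip(out, target))

def backward(layers, x, target):
    """Backward pass: return the loss gradient for every weight."""
    acts = forward(layers, x)
    # Error at the output layer; the derivative of tanh(z) is 1 - tanh(z)^2.
    delta = [(o - t) * (1 - o * o) for o, t in zip(acts[-1], target)]
    grads = [None] * len(layers)
    for k in reversed(range(len(layers))):
        inputs = acts[k] + [1.0]
        grads[k] = [[d * v for v in inputs] for d in delta]
        if k > 0:
            # Push the error back through this layer's weights (bias excluded).
            delta = [sum(layers[k][j][i] * delta[j] for j in range(len(delta)))
                     * (1 - acts[k][i] ** 2)
                     for i in range(len(acts[k]))]
    return grads

def train_step(layers, x, target, lr=0.1):
    """One gradient-descent update of all weights, in place."""
    for layer, grad in zip(layers, backward(layers, x, target)):
        for row, grad_row in zip(layer, grad):
            for i, g in enumerate(grad_row):
                row[i] -= lr * g

# Usage: three stacked layers (2 inputs -> 4 -> 4 -> 1 output).
layers = init_layers([2, 4, 4, 1])
x, target = [0.5, -0.2], [0.3]
before = loss(layers, x, target)
for _ in range(200):
    train_step(layers, x, target)
print(before, loss(layers, x, target))
```

A real deep network would use ten or more layers, many training examples and a GPU-backed library; the point here is only that the forward pass and the backward pass walk the same stack of layers in opposite directions.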

What is Deep Learning?

The path to deep learning has evolved through the following phases:

- Rule-based systems: We took inputs and hand-designed programs that created outputs.

- Classic machine learning: We built on the rule-based system by still hand-designing the features, but letting the machine learn the mapping from those features to an output.

- Representational machine learning: Inputs create learned features, which are then mapped to conclusions that produce an output.

- Deep learning: Finally, inputs create learned features, which then become more complex learned features, which are in turn mapped to an output.
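The difference between the first two phases can be sketched in a few lines of Python. Everything in this example is hypothetical (the transaction amounts, the 1,000 cutoff, the function names); it only illustrates who writes the decision: a person in the rule-based case, the data in the classic machine learning case.

```python
def rule_based(amount):
    # Rule-based system: a person hard-codes the whole decision.
    return amount > 1000

def hand_feature(amount, typical_amount):
    # Classic machine learning, part 1: a person still designs the feature,
    # here "how large is this payment compared with the customer's usual one".
    return amount / typical_amount

def learn_threshold(features, labels):
    # Classic machine learning, part 2: the mapping from feature to output
    # is learned, here by picking the cutoff with the fewest training errors.
    best_threshold, best_errors = None, len(labels) + 1
    for candidate in sorted(features):
        errors = sum((f >= candidate) != y for f, y in zip(features, labels))
        if errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold

# Hypothetical labeled examples: (amount, typical amount, suspicious?)
examples = [(50, 40, False), (1200, 1500, False), (300, 30, True), (900, 100, True)]
features = [hand_feature(a, t) for a, t, _ in examples]
labels = [y for _, _, y in examples]
threshold = learn_threshold(features, labels)
print(threshold)
```

In representational and deep learning, the `hand_feature` step would itself be learned from raw inputs instead of being written by hand.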

Oh, and of course if you follow the thinking of Bill Gates, Stephen Hawking and Elon Musk, artificial intelligence can best be defined as scary. But let’s just follow Alan Turing’s lead and see where we can take these new levels of deep learning.

Why Should Business Care About Deep Learning?

It does sound expensive and complicated, but understanding deep learning is invaluable. Deep learning is what makes big data more than a buzzword. Machine learning and its deeper cousin are what will give you competitive insights into your customers and then allow you to turn those insights into drilled-down campaigns. Deep learning has a computer learn patterns and recognize habits that would otherwise take millions in market research just to make educated guesses about.

Paredes Palacios offered four situations in which deep learning is a good answer for the more data-centric of us:

- When there is a big gap between the representation that you have and the target you want to predict. It’s a good idea to let the network learn how to bridge this gap.

- When hand-crafted features don’t work.

- When there is a lot of training data, for instance to cover visual distortions.

- When you can affordably access the hardware to run it on, because it takes a lot of memory and processing.

In general, he says deep learning is useful if “it’s something that’s old but now needs to be trained.” We’re not talking about teaching old dogs new tricks here, but rather revamping old processes to gain newer, deeper insights.

For example, Natalie Busa, data platform architect at ING, said at the conference that the Dutch bank is currently applying the classic machine learning approach to give personal banking and small business customers better insight into their finances and spending habits. The financial institution’s next step is toward representational machine learning: creating a hierarchy of features that describe other features, which deepens the learning and allows for increasingly precise fraud detection.

Busa says that enterprise data science has to start lean, something that predictive APIs allow you to do by giving you access to the complicated insights of machine learning, artificial intelligence, and even deep learning without bearing the cost of building intensive physical and digital infrastructure.

Busa says that data science must deliver an outcome. It’s not just about delivering a model; it’s about asking: How can you quantify that model?

- What’s the improvement?

- Can you quantify your model quality?

- Can you quantify impact?

- What is the impact on the customer journey?

- Can it be converted into financial results?
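As a rough illustration of how these questions might be turned into numbers for a fraud-detection model, here is a small Python sketch. The labels, the baseline, the 200 average fraud loss and the 10 review cost are all invented for the example; precision and recall are standard metrics, but the value formula is just one simple assumption, not ING's method.

```python
def confusion_counts(y_true, y_pred):
    """Count true positives, false positives and false negatives."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, fn

def precision_recall(y_true, y_pred):
    """Model quality: how many alerts were right, how much fraud was caught."""
    tp, fp, fn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def estimated_value(y_true, y_pred, avg_fraud_loss, review_cost):
    """Financial result (assumed formula): fraud prevented minus review effort."""
    tp, fp, _ = confusion_counts(y_true, y_pred)
    return tp * avg_fraud_loss - (tp + fp) * review_cost

# Hypothetical data: 1 = fraud, 0 = legitimate.
actual = [1, 0, 1, 1, 0, 0, 1, 0]
baseline = [1, 1, 0, 0, 0, 0, 1, 0]   # e.g. the existing rules
model = [1, 0, 1, 0, 0, 0, 1, 0]      # e.g. the new model

print(precision_recall(actual, baseline), precision_recall(actual, model))
improvement = (estimated_value(actual, model, 200, 10)
               - estimated_value(actual, baseline, 200, 10))
print(improvement)
```

Comparing against a baseline answers "what's the improvement?", and the value function is one way to start the conversation about financial results.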

Paredes Palacios points out that lean is also becoming more affordable. Talking specifically about representational learning, he said, “The good news is that we are able to learn using these models, thanks to the GPU,” which is a kind of high-performance computer chip specializing in mathematical computation. This is particularly the case for natural language processing (NLP) and computer vision, where the GPU enables you to apply deep learning to images over a network. He said the most common of these neural network approaches include:

- Deep neural networks: multilayer perceptrons with more layers.

- Word2vec: word embeddings for NLP.

- Auto-encoders and de-noising auto-encoders: multilayer perceptrons for extracting and composing robust features.
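To give a feel for the last item in the list above, here is a toy de-noising auto-encoder in plain Python. It is a sketch under several assumptions (no bias terms, a tanh encoder, a linear decoder, masking noise, made-up six-value patterns), not a reference implementation. The key idea it shows is that the network sees a corrupted input but is scored against the clean one, which pushes the hidden layer toward robust features.

```python
import math
import random

def encode(enc, x):
    """Hidden features: tanh of a weighted sum of the inputs."""
    return [math.tanh(sum(w * v for w, v in zip(row, x))) for row in enc]

def decode(dec, h):
    """Linear reconstruction of the input from the hidden features."""
    return [sum(w * v for w, v in zip(row, h)) for row in dec]

def train_denoising_autoencoder(data, n_hidden=3, noise=0.3, lr=0.1,
                                epochs=300, seed=0):
    rng = random.Random(seed)
    n = len(data[0])
    enc = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n_hidden)]
    dec = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n)]
    for _ in range(epochs):
        for x in data:
            # Corrupt the input by randomly zeroing entries (masking noise).
            noisy = [0.0 if rng.random() < noise else v for v in x]
            h = encode(enc, noisy)
            y = decode(dec, h)
            # The error is measured against the clean input, not the noisy one.
            err = [yi - xi for yi, xi in zip(y, x)]
            # Back-propagate to the hidden layer before changing the decoder.
            dh = [sum(err[i] * dec[i][j] for i in range(n)) * (1 - h[j] ** 2)
                  for j in range(n_hidden)]
            for i in range(n):
                for j in range(n_hidden):
                    dec[i][j] -= lr * err[i] * h[j]
            for j in range(n_hidden):
                for i in range(n):
                    enc[j][i] -= lr * dh[j] * noisy[i]
    return enc, dec

def reconstruction_error(enc, dec, data):
    """Mean squared reconstruction error on clean inputs."""
    return sum(sum((y - x) ** 2 for y, x in zip(decode(dec, encode(enc, x)), x))
               for x in data) / len(data)

# Hypothetical patterns in which values come in pairs, so a masked value
# can be recovered from its partner.
patterns = [[1, 1, 0, 0, 0, 0], [0, 0, 1, 1, 0, 0], [0, 0, 0, 0, 1, 1]]
untrained = train_denoising_autoencoder(patterns, epochs=0)
trained = train_denoising_autoencoder(patterns)
print(reconstruction_error(*untrained, patterns),
      reconstruction_error(*trained, patterns))
```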

With advancements in deep learning like this, Paredes Palacios said, “Now we can solve a problem, and now we can move to other things like using less data, reinforcement learning, active learning and so on,” allowing businesses and especially their data scientists to enact machine learning once and then focus on improving upon it.

Feature image via Pixabay.
